001: Fashion MNIST dataset training using PyTorch

This project is part of the Bertelsmann Tech Scholarship AI Track Nanodegree Program from Udacity. In this project, we use the Fashion-MNIST dataset, which contains 28x28 greyscale images of clothing (60,000 training images and 10,000 test images). Our goal is to build a neural network with PyTorch and train it to classify these images. The trained network returns a probability for each of the 10 clothing classes.

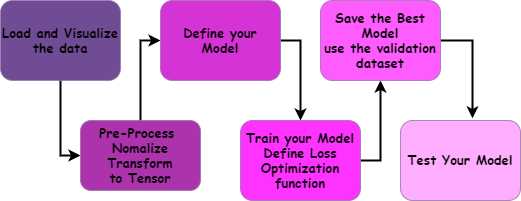

Let’s write down a road map to follow:

- Load and visualize the data

- Preprocess the data (transforms: conversion to tensors, normalization)

- Define the model using PyTorch

- Train the model

- Save the best model: select it using the validation dataset

- Test the model

Importing Libraries

- `torch.optim` implements various optimization algorithms such as SGD and Adam.

- `torch.nn.functional` provides functions such as the non-linear activations `relu`, `softmin`, `softmax`, and `logsigmoid`.

- The `torchvision` package consists of popular datasets, model architectures, and common image transformations for computer vision. We use `datasets` and `transforms` from `torchvision` to download the Fashion-MNIST dataset and to transform the images with a composed series of transformations.

- `SubsetRandomSampler` is used to split the training dataset into training and validation subsets so that we can validate our model.
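As a minimal sketch of that split, the training indices can be shuffled once and divided between two `SubsetRandomSampler` objects. The 20% validation fraction, the batch size of 64, and the fixed seed are assumptions (the post does not specify them), and `make_train_valid_loaders` is a hypothetical helper name.

```python
import numpy as np
from torch.utils.data import DataLoader, SubsetRandomSampler


def make_train_valid_loaders(train_data, valid_size=0.2, batch_size=64, seed=0):
    """Split one training dataset into training and validation loaders.

    valid_size, batch_size, and seed are illustrative defaults (assumptions),
    not values taken from the original project.
    """
    num_train = len(train_data)
    # Shuffle the indices once so the split is random but reproducible.
    indices = np.random.default_rng(seed).permutation(num_train).tolist()
    split = int(np.floor(valid_size * num_train))
    valid_idx, train_idx = indices[:split], indices[split:]

    # Each sampler draws only from its own subset of indices, in random order.
    train_sampler = SubsetRandomSampler(train_idx)
    valid_sampler = SubsetRandomSampler(valid_idx)

    # shuffle must stay off (the default) when a sampler is passed.
    trainloader = DataLoader(train_data, batch_size=batch_size, sampler=train_sampler)
    validloader = DataLoader(train_data, batch_size=batch_size, sampler=valid_sampler)
    return trainloader, validloader
```

Because each sampler only draws from its own indices, the two loaders never share an image. `DataLoader` rejects `shuffle=True` together with a `sampler`, which is why shuffling is left to the samplers here.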

Download Datasets and Transform Images

- Extract — Get the Fashion-MNIST image data from the source

- Transform — Put our data into a tensor form.

- Load — Put our data into an object to make it easily accessible.

- `transforms.Compose` chains a series of transformations to prepare the dataset.

- `transforms.ToTensor` converts a PIL image (modes L, LA, P, I, F, RGB, YCbCr, RGBA, CMYK, 1) or a `numpy.ndarray` (H x W x C) with values in the range [0, 255] to a `torch.FloatTensor` of shape (C x H x W) in the range [0.0, 1.0].

- `transforms.Normalize` normalizes a tensor image with a given mean and standard deviation, computing (input - mean) / std for each channel. The tensor must have shape (C x H x W), which `transforms.ToTensor` already provides.

- `datasets.FashionMNIST` downloads the Fashion-MNIST dataset and applies the transform. Setting `train=True` returns the training dataset; `train=False` returns the test dataset.

- `torch.utils.data.DataLoader` wraps the training or test data. `batch_size` defines how many samples to load per batch, and `shuffle=True` reshuffles the data at every epoch.

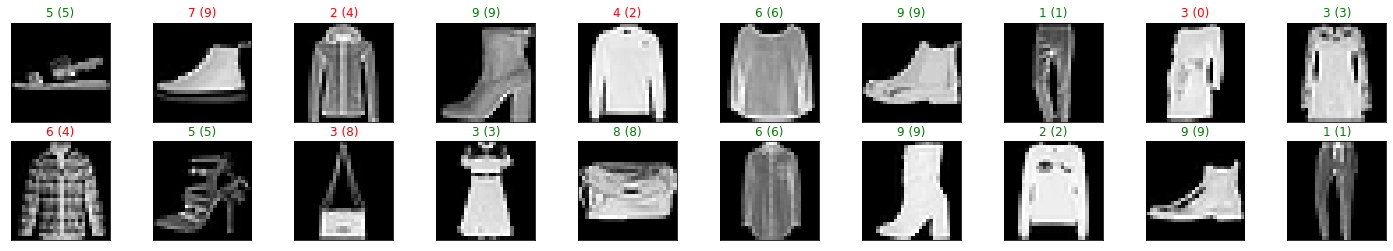

Visualize a Batch of Training Data

Building The Network

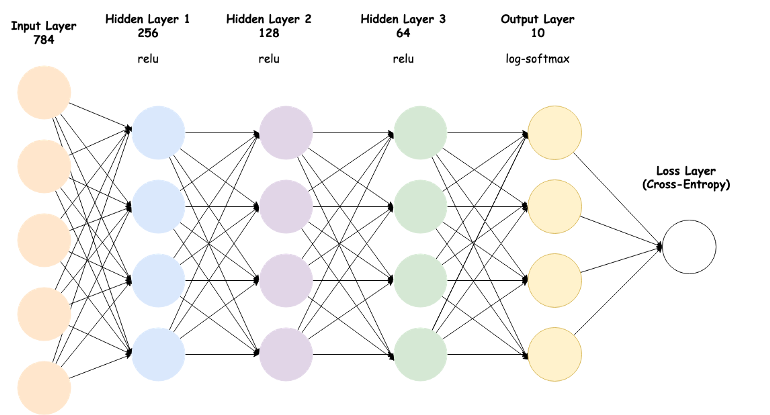

Our images are 28x28 2D tensors, but a fully connected network expects 1D input vectors, so we convert each image into a vector of 28 x 28 = 784 values. This is called flattening. These 784 values form our input layer, and the output layer needs 10 units, one for each clothing class.
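Flattening can be seen by reshaping a dummy batch. The batch size of 64 and the random tensor are placeholders standing in for a real batch from the data loader, which arrives as (batch, channels, height, width).

```python
import torch

# Hypothetical batch of 64 single-channel 28x28 images: (batch, channels, height, width).
images = torch.randn(64, 1, 28, 28)

# Keep the batch dimension and let -1 infer the rest: 1 * 28 * 28 = 784.
flat = images.view(images.shape[0], -1)
print(flat.shape)  # torch.Size([64, 784])
```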

The number of hidden layers and the number of units in each can be chosen freely, but this choice directly affects the network's performance. We should experiment with these numbers to find a well-performing model for our image classification problem.
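One concrete way to see how this choice matters is to count the trainable parameters a layout creates. The helper below (`count_linear_params`, a hypothetical name) counts weights plus biases for a stack of fully connected layers. The 64-unit third hidden layer in the first example is an assumption; the post only gives the first two hidden sizes.

```python
def count_linear_params(layer_sizes):
    """Count weights + biases in a stack of fully connected layers.

    layer_sizes lists the width of every layer, input first and output last.
    Each nn.Linear(n_in, n_out) holds n_in * n_out weights and n_out biases.
    """
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


# 784 -> 256 -> 128 -> 64 -> 10 (third hidden size of 64 is assumed).
print(count_linear_params([784, 256, 128, 64, 10]))   # 242762
# A wider alternative layout, for comparison.
print(count_linear_params([784, 512, 256, 128, 10]))  # 567434
```

Doubling the hidden widths more than doubles the parameter count, which increases the model's capacity but also its risk of overfitting.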

- We define our neural network (NN) architecture as a Python class.

- PyTorch provides `nn.Module`, the base class for building neural networks. Calling `super().__init__()` initializes this base class, which tracks the architecture and provides many useful methods and attributes.

- In our architecture, we define 3 hidden layers and 1 output layer.

- `self.fc1 = nn.Linear(784, 256)` creates a module for the linear transformation xW^T + b with 784 inputs and 256 outputs for the first hidden layer, and assigns it to `self.fc1`. The module automatically creates the weight and bias tensors, which we use in the `forward` method.

- Similarly, the following lines create a linear transformation with 256 inputs and 128 outputs, and so on.

- In the `forward` method, we reshape the input tensor `x` so that its first dimension is the batch size, `x.shape[0]`, and `-1` lets PyTorch infer the second dimension (784).

- Then we pass `x` through the operations defined in `__init__`: each hidden layer applies its linear transformation, followed by a ReLU activation and dropout, and the result is reassigned to `x`.

- For the output layer, we apply the log-softmax function to obtain log-probabilities for the 10 classes.
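A minimal sketch of a class matching this description follows. The post names only `fc1` (784 to 256) and the 256-to-128 second layer, so the 64-unit third hidden layer, the dropout probability of 0.2, and the layer names `fc2` to `fc4` are assumptions.

```python
import torch
import torch.nn.functional as F
from torch import nn


class Classifier(nn.Module):
    def __init__(self, dropout_p=0.2):
        super().__init__()
        # Three hidden layers and one output layer. The 64-unit third layer
        # and the dropout probability are assumptions, not from the post.
        self.fc1 = nn.Linear(784, 256)
        self.fc2 = nn.Linear(256, 128)
        self.fc3 = nn.Linear(128, 64)
        self.fc4 = nn.Linear(64, 10)
        self.dropout = nn.Dropout(p=dropout_p)

    def forward(self, x):
        # Flatten (batch, 1, 28, 28) -> (batch, 784); -1 infers 784.
        x = x.view(x.shape[0], -1)
        # Linear -> ReLU -> dropout for each hidden layer.
        x = self.dropout(F.relu(self.fc1(x)))
        x = self.dropout(F.relu(self.fc2(x)))
        x = self.dropout(F.relu(self.fc3(x)))
        # Log-probabilities over the 10 classes (pairs with nn.NLLLoss).
        return F.log_softmax(self.fc4(x), dim=1)
```

Because the output is a log-probability, `torch.exp(model(images))` recovers class probabilities that sum to 1 for each image.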

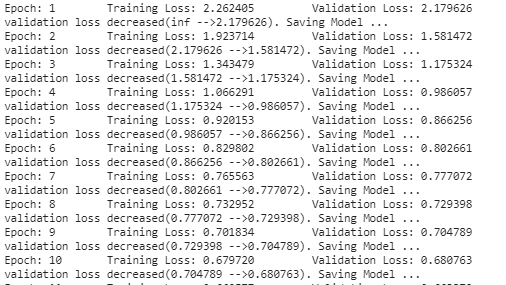

The training process is as follows:

- `model = Classifier()` creates our model.

- We define the criterion as the negative log-likelihood loss (`nn.NLLLoss`) and an optimizer (SGD) that updates the model's parameters as we loop through the dataset over multiple epochs.

- We track the training loss and validation loss for each epoch to evaluate our model.

- To train, we set the model to training mode with `model.train()`, then loop over `trainloader` to get batches of images and labels from the training data. The following steps make up a training pass.

`optimizer.zero_grad()`: clear the gradients of all optimized variables.

`log_ps = model(images)`: make a forward pass through the network, passing the images to the model to get log-probabilities.

`loss = criterion(log_ps, labels)`: use the log-probabilities (`log_ps`) and the labels to calculate the loss.

`loss.backward()`: perform a backward pass through the network to calculate the gradients of the model parameters.

`optimizer.step()`: take a step with the optimizer to update the model parameters.

- We use the validation data to decide when to stop training, so after each training epoch we loop over the validation images and labels.

- In the validation pass, we apply the model and calculate the loss on the validation set, tracking the validation loss alongside the training loss. When the training loss keeps decreasing but the validation loss no longer does, the model is starting to overfit, and that is the point to stop training.

- We print the average training and validation losses, and save the model whenever the current validation loss is lower than the lowest validation loss saved so far.
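The training and validation passes described above can be sketched as a single function. The learning rate, number of epochs, checkpoint filename, and the per-sample averaging over `len(loader.sampler)` are assumptions rather than values from the post. The sketch also switches to `model.eval()` and `torch.no_grad()` during validation, which disables dropout and skips gradient tracking; the post does not say whether it does this.

```python
import numpy as np
import torch
from torch import nn, optim


def train(model, trainloader, validloader, epochs=30, lr=0.01, save_path="model.pt"):
    """Train with NLLLoss + SGD, keeping the weights with the lowest validation loss.

    epochs, lr, and save_path are illustrative assumptions.
    """
    criterion = nn.NLLLoss()
    optimizer = optim.SGD(model.parameters(), lr=lr)
    valid_loss_min = np.inf
    train_losses, valid_losses = [], []

    for epoch in range(epochs):
        # Training pass: dropout is active in train mode.
        model.train()
        running_loss = 0.0
        for images, labels in trainloader:
            optimizer.zero_grad()             # clear old gradients
            log_ps = model(images)            # forward pass -> log-probabilities
            loss = criterion(log_ps, labels)  # negative log-likelihood loss
            loss.backward()                   # backward pass -> gradients
            optimizer.step()                  # update parameters
            running_loss += loss.item() * images.size(0)

        # Validation pass: eval mode disables dropout; no_grad skips autograd.
        model.eval()
        valid_loss = 0.0
        with torch.no_grad():
            for images, labels in validloader:
                valid_loss += criterion(model(images), labels).item() * images.size(0)

        # Average loss per sample seen by each loader.
        train_loss = running_loss / len(trainloader.sampler)
        valid_loss = valid_loss / len(validloader.sampler)
        train_losses.append(train_loss)
        valid_losses.append(valid_loss)
        print(f"Epoch {epoch + 1}: train loss {train_loss:.6f}, validation loss {valid_loss:.6f}")

        # Save a checkpoint whenever the validation loss improves.
        if valid_loss < valid_loss_min:
            torch.save(model.state_dict(), save_path)
            valid_loss_min = valid_loss

    return train_losses, valid_losses
```

Dividing by `len(loader.sampler)` rather than `len(loader.dataset)` matters when training and validation loaders share one dataset through `SubsetRandomSampler`, since each loader only visits its own subset.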

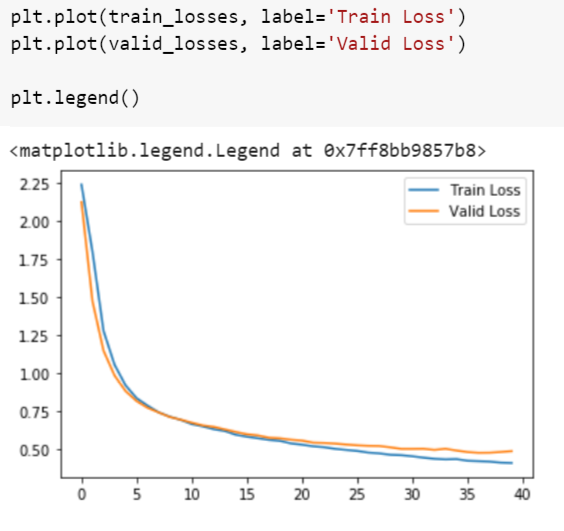

We keep track of the training and validation losses to examine how their averages change over time. The following plot shows the average training loss and validation loss calculated for each epoch.
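A plot like this can be produced with matplotlib, assuming the per-epoch averages were collected in two lists. `plot_losses` and the output filename are hypothetical; the `Agg` backend is selected so the figure renders without a display.

```python
import matplotlib

matplotlib.use("Agg")  # render to files without a display
import matplotlib.pyplot as plt


def plot_losses(train_losses, valid_losses, path="losses.png"):
    """Plot per-epoch average training and validation losses and save the figure."""
    fig, ax = plt.subplots()
    ax.plot(train_losses, label="Training loss")
    ax.plot(valid_losses, label="Validation loss")
    ax.set_xlabel("Epoch")
    ax.set_ylabel("Average loss")
    ax.legend(frameon=False)
    fig.savefig(path)
    return fig
```

A widening gap, with the training curve still falling while the validation curve flattens or rises, is the overfitting signal described above.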

Test the Trained Network

Finally, we test our best model on previously unseen test data. Testing on unseen data is a good way to check that our model generalizes well.

So we come to the end. We would like to see how our model performs, so we print out its test loss and accuracy:
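Per-class accuracy can be computed by comparing the most likely class (the `argmax` of the log-probabilities) with the true label for every test image. The sketch below is one way to do it, assuming a `testloader` that yields `(images, labels)` batches and a model that outputs log-probabilities; `evaluate` is a hypothetical helper, and percentages are truncated to whole numbers.

```python
import numpy as np
import torch
from torch import nn


def evaluate(model, testloader, num_classes=10):
    """Return the average test loss plus per-class correct/total counts."""
    criterion = nn.NLLLoss()
    class_correct = np.zeros(num_classes, dtype=int)
    class_total = np.zeros(num_classes, dtype=int)
    test_loss = 0.0

    model.eval()  # disable dropout for evaluation
    with torch.no_grad():
        for images, labels in testloader:
            log_ps = model(images)
            test_loss += criterion(log_ps, labels).item() * images.size(0)
            preds = log_ps.argmax(dim=1)  # most likely class per image
            for label, pred in zip(labels.tolist(), preds.tolist()):
                class_total[label] += 1
                class_correct[label] += int(label == pred)

    test_loss /= class_total.sum()
    print(f"Test Loss: {test_loss:.6f}")
    for i in range(num_classes):
        if class_total[i] > 0:
            print(f"Test Accuracy of {i}: {100 * class_correct[i] // class_total[i]}% "
                  f"({class_correct[i]}/{class_total[i]})")
    overall = class_correct.sum()
    print(f"Test Accuracy (Overall): {100 * overall // class_total.sum()}% "
          f"({overall}/{class_total.sum()})")
    return test_loss, class_correct, class_total
```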

Test Loss: 0.445839

| Class | Name | Accuracy | Correct / Total |
|---|---|---|---|
| 0 | T-shirt/top | 85% | 859/1000 |
| 1 | Trouser | 95% | 954/1000 |
| 2 | Pullover | 75% | 752/1000 |
| 3 | Dress | 86% | 865/1000 |
| 4 | Coat | 76% | 766/1000 |
| 5 | Sandal | 90% | 906/1000 |
| 6 | Shirt | 48% | 482/1000 |
| 7 | Sneaker | 91% | 911/1000 |
| 8 | Bag | 95% | 953/1000 |
| 9 | Ankle boot | 93% | 938/1000 |
| Overall | | 83% | 8386/10000 |

Then we visualize test images and their labels in the format predicted (ground-truth). The text is green for correctly classified examples and red for incorrect predictions.
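A sketch of such a figure with matplotlib follows. The class names are the standard Fashion-MNIST labels for indices 0 to 9; the function name `show_predictions`, the figure size, and the two-row layout are assumptions.

```python
import matplotlib

matplotlib.use("Agg")  # render without a display
import matplotlib.pyplot as plt
import numpy as np

# Fashion-MNIST class names, in label order 0-9.
CLASSES = ["T-shirt/top", "Trouser", "Pullover", "Dress", "Coat",
           "Sandal", "Shirt", "Sneaker", "Bag", "Ankle boot"]


def show_predictions(images, preds, labels, n=20):
    """Plot images titled 'predicted (ground-truth)': green if correct, red if not.

    images: array of shape (N, 28, 28) or (N, 1, 28, 28);
    preds and labels: integer class indices.
    """
    n = min(n, len(images))
    fig = plt.figure(figsize=(25, 4))
    for idx in range(n):
        ax = fig.add_subplot(2, (n + 1) // 2, idx + 1, xticks=[], yticks=[])
        ax.imshow(np.squeeze(images[idx]), cmap="gray")
        correct = preds[idx] == labels[idx]
        ax.set_title(f"{CLASSES[preds[idx]]} ({CLASSES[labels[idx]]})",
                     color="green" if correct else "red")
    return fig
```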

That completes training and testing our neural network. This project shows the road map for building a basic neural network with PyTorch. Thank you so much to Udacity and Bertelsmann for providing these courses. Note: I am still a learner, so please let me know if you have any additional information.
